S1B3: Three Experimentation Pitfalls - A Story to Avoid Them

This week marked our annual Product Barcamp at XING. While around 40 Product Managers gathered in the New Work Harbour, another 40 or so joined online. As the days get shorter and the cozy winter season is about to start, I sat down to read a story to my peers. It’s a story about obstacles, failure, and even war from my past experimentation endeavors.

📖 Story Time!


The story kick-started a valuable discussion about how we Product Managers approach experimentation, and AB testing in particular, at XING. It led to a new working group that will meet regularly to make progress on this topic.

Maybe it will spark new thoughts, questions, or impulses for you too? Here it is.

Three Experimentation Pitfalls

Pretty much exactly 5 years ago, in the fall of 2016, I worked in the UK on a game called PokerStars. With millions of daily active, paying users, it’s an enormous business.

I was in charge of growing new users, making sure they get to create an account, fund it, understand how to play the game and find a game that suits them so they get the most out of their time and money and come back again in the future.

After doing some research and sparring ideas with stakeholders, one opportunity stood out as the one to pursue further. The idea was to insert a visually engaging screen that features a welcome offer for new users.

Everyone was on board. It was a no-brainer. No questions. But those were just opinions, right? I needed scientific evidence!

So, I sat down and prepared the JIRA tickets needed to get an AB test started. Old, obviously shitty screen versus shiny, obviously much better screen. It went into the magic of engineering and, as we approached a state of readiness, I got to spend more and more time with my analysts. So far, so good?

Hmm no. From there, it went downhill. We had multiple hypotheses and couldn’t agree on the most important one. With that, we weren’t on the same page regarding which KPI should be the deciding factor. We couldn’t even agree on whether this should really be an AB test. Why? Because we didn’t play by the same rules.

The Analyst’s goal was simply to answer a question in a clean and accurate way. He wanted to test a hypothesis. My goal was to prove that my grand idea was as grand as I thought it was. And I decided to make it an AB test because people treat the results as beyond discussion. They are proven by science. In a way, I was asking for any positive number that I could use to make this look like a success. I was asking for absolution and didn’t really care much about the data. What should I have done instead?

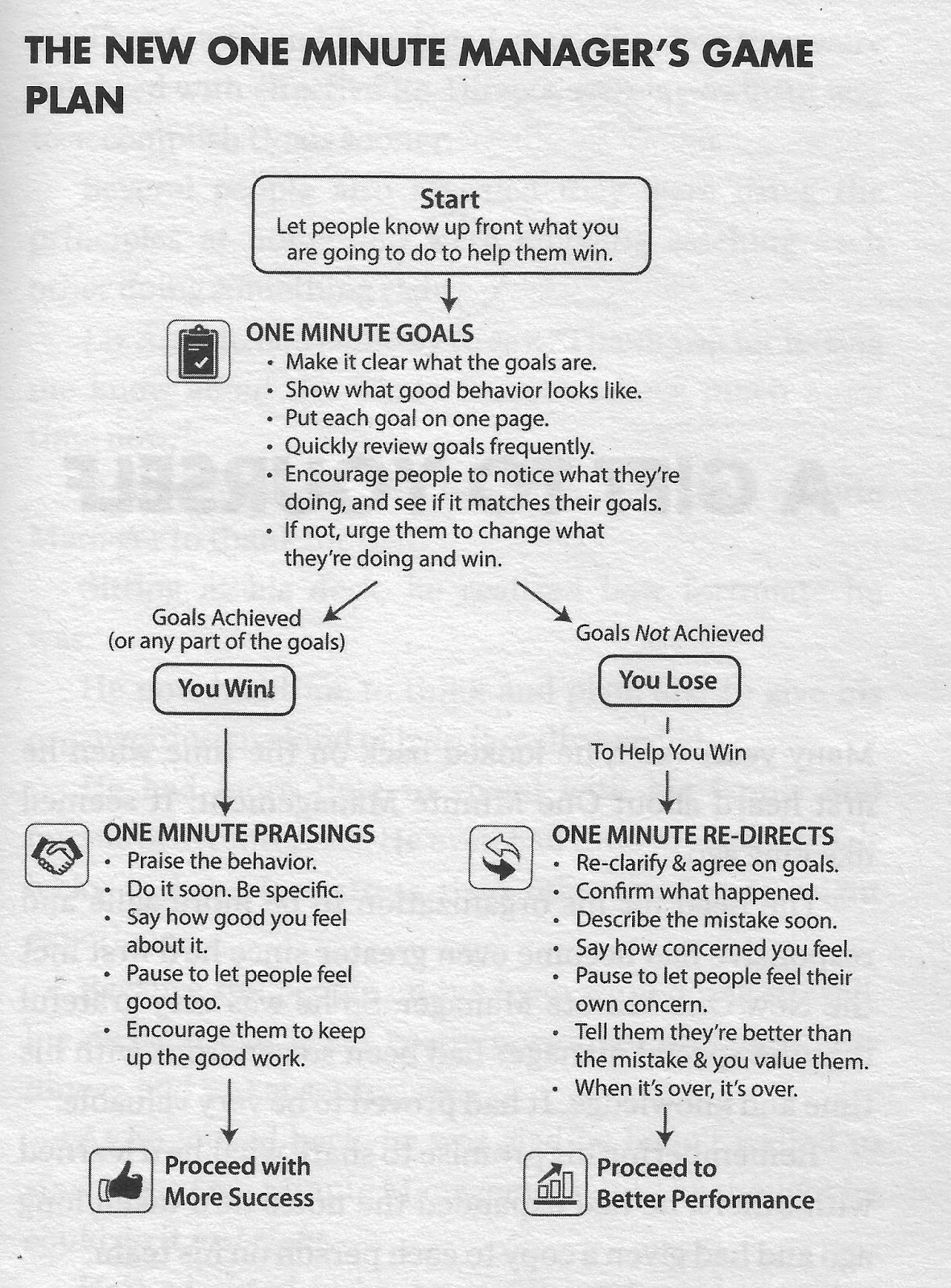

At the beginning of every experiment, make sure that there is a clear hypothesis to answer. Be honest with yourself that it’s not about producing some evidence to prove your point. There are easier ways to get that! Evaluate together with your analysts and user insights colleagues what methods to use. If you decide on one, agree on the rules and stick to them. And only let data drive your decisions if your data basis allows it. If it’s broken or you don’t trust it, invest in getting it right. Finally, be an advocate for your data to make sure everyone understands and uses it in the right way for the right reasons.
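To make "agree on the rules and stick to them" a bit more tangible, here is a minimal sketch of what writing the rules down before launch could look like. Everything in it is an assumption for illustration: the `ExperimentPlan` fields, the example hypothesis, the 5% significance level, and the choice of a pooled two-proportion z-test. Your analysts may well prefer a different method.

```python
import math
from dataclasses import dataclass
from statistics import NormalDist


@dataclass(frozen=True)
class ExperimentPlan:
    """Hypothetical record of the rules, agreed on before the test starts."""
    hypothesis: str
    primary_kpi: str
    alpha: float = 0.05  # assumed significance level


def two_sided_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Pooled two-proportion z-test; assumes some, but not all, users convert."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def decide(plan: ExperimentPlan, conv_a: int, n_a: int, conv_b: int, n_b: int) -> str:
    """Apply the pre-agreed rule to the one primary KPI, nothing else."""
    if two_sided_p_value(conv_a, n_a, conv_b, n_b) >= plan.alpha:
        return "inconclusive"
    return "ship B" if conv_b / n_b > conv_a / n_a else "keep A"


plan = ExperimentPlan(
    hypothesis="A welcome-offer screen increases first deposits",  # illustrative
    primary_kpi="first_deposit_rate",  # illustrative KPI name
)
print(decide(plan, conv_a=1000, n_a=10_000, conv_b=1200, n_b=10_000))
```

The point of the frozen dataclass is that the hypothesis, the KPI, and the threshold are fixed up front, so nobody (including an overly motivated Product Owner) can go hunting for "any positive number" afterwards.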

Alright, let’s continue with the story because it got worse. The Analyst and I continued to argue and fight over all sorts of details. We were still at war over the nature of the AB test when the implementation hit production. When we checked it, we noticed that the group split wasn’t as random as we thought it would be (because of a technical race condition, I’ll spare you the details) and we couldn’t be 100% sure about which user saw which variant.

That didn’t help and, as a Product Owner, it was my fault. I saw the analysts as a service provider. I get the stuff built and they check the numbers to see if it was successful, I thought. That’s not how it works.

The Analyst should have been involved from the very beginning. Even though they might not always join your standups or refinement and planning sessions, Analysts are part of your team. What we talk about as Product Owners might be fascinating to us, but really is Kindergarten for them. They are the experts and if you don’t team up with Analysts, involve them early and most importantly trust and value their contribution, you won’t get the results you are looking for. So, instead of creating an analysis ticket in JIRA alongside your implementation ticket, meet at the discovery stage and continue to collaborate throughout the process. Finally, remember that we are all after the same goal. The questions they ask or the assumptions they challenge are there to ensure better progress towards the goal of creating great products.
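One concrete thing an early conversation with your Analyst can surface is how users get assigned to variants in the first place. The sketch below shows one common approach, deterministic hash-based bucketing, plus a crude balance check. It is not what we built back then; the function names, the experiment key, and the tolerance are all made up for illustration.

```python
import hashlib
from collections import Counter

VARIANTS = ("A", "B")


def assign_variant(user_id: str, experiment: str, variants=VARIANTS) -> str:
    """Map a user to a variant based only on stable inputs.

    The same user and experiment always yield the same variant, so the
    assignment does not depend on request timing or server state.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def split_looks_balanced(counts: Counter, tolerance: float = 0.02) -> bool:
    """Crude sanity check: every variant's share is close to an even split."""
    total = sum(counts.values())
    expected_share = 1 / len(counts)
    return all(abs(n / total - expected_share) <= tolerance for n in counts.values())


# Simulated user IDs and a hypothetical experiment key.
counts = Counter(
    assign_variant(f"user-{i}", "welcome-offer-screen") for i in range(10_000)
)
print(counts, split_looks_balanced(counts))
```

Because the variant can be recomputed from the user ID at any time, the question "which user saw which variant?" has an answer even after the fact. A proper sample ratio check would use a statistical test rather than a fixed tolerance, which is exactly the kind of detail to settle with your Analyst.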

Alright, let’s continue with the story because it got worse. Well, we got the issues sorted with some more iterations. But because I was just a little too motivated, I sneaked in a few more variants making it an ABCD test. The Analyst was speechless and went back to his desk. A few days later, he came back with the calculated runtime: 2 months. Of course, I couldn’t believe that. “That long? Can’t you do better than that?” I asked. The analyst bluntly said, “I can’t. But you can certainly do better”. And he was absolutely right.

When we Product Managers demand complex experiments, the result is more complexity in engineering, planning, design, analysis and so on, which increases not only time and effort but also the chance of mistakes.

Less complexity means less time, less effort, fewer mistakes.

If you get results faster, then you can bring value to users faster. It doesn’t always have to be an ABCD test. Maybe it’s better to test A versus B and, if B wins, get it live and test it against C, and so on. But have that discussion on the best experiment design with your analysts. They will provide you with the best option. Follow it.
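To get a feeling for why extra variants stretch the runtime, here is a rough back-of-the-envelope sketch. It uses the textbook sample size approximation for comparing two conversion rates and a Bonferroni correction for comparing several variants against the control. The baseline rate, the uplift, and the daily traffic are invented numbers, and this is not how my Analyst arrived at his 2 months; treat it as an illustration only.

```python
import math
from statistics import NormalDist


def users_per_variant(baseline: float, uplift: float, alpha: float = 0.05,
                      power: float = 0.8, comparisons: int = 1) -> int:
    """Approximate users needed per variant for a two-sided two-proportion test.

    `comparisons` applies a Bonferroni correction (alpha / comparisons),
    which is one conservative way to handle several variants at once.
    """
    p1 = baseline
    p2 = baseline * (1 + uplift)  # relative uplift we want to be able to detect
    z_alpha = NormalDist().inv_cdf(1 - (alpha / comparisons) / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


def runtime_days(variants: int, daily_new_users: int,
                 baseline: float, uplift: float) -> int:
    """Days until every variant has enough users, with traffic split evenly."""
    needed = users_per_variant(baseline, uplift, comparisons=variants - 1)
    return math.ceil(needed * variants / daily_new_users)


# Hypothetical inputs: 10% baseline conversion, 5% relative uplift,
# 2,000 new users per day entering the experiment.
ab_days = runtime_days(2, daily_new_users=2000, baseline=0.10, uplift=0.05)
abcd_days = runtime_days(4, daily_new_users=2000, baseline=0.10, uplift=0.05)
print(f"AB test: ~{ab_days} days, ABCD test: ~{abcd_days} days")
```

Two effects stack up here: the same traffic is split four ways instead of two, and each comparison needs more users because of the stricter significance threshold. Under these assumptions, going from AB to ABCD more than doubles the runtime.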

Alright, let’s summarise the key messages we had so far:

- Play by the Rules & Advocate for your Data

- Team-up with your Analysts

- Simplify Always

Final Message: Fortune Favours the Brave

Have you read Ryan Holiday’s great new book “Courage is Calling” yet? It’s brilliant.

We should lead by example and focus on the right process. If you feel the pressure to cut corners or maybe to look the other way when there is something wrong with the test design or data then be brave and take a stance. Because you want to base decisions on valid answers driven by data, driven by our users. Everything else is a waste of time.

To wrap things up and give you some closure on how the story ends, I’m happy to report a happy ending. The ABCD test went live and was a wild success. But that’s not the happy ending.

My son Lasse was born in August 2017. On my first day in the office, the Analyst welcomed me with the biggest smile and a lovely gift for my newborn baby. And it wasn’t much of a surprise for me, because we had worked through our conflicts and got a chance to understand each other’s perspectives. It made us an awesome PO-Analyst duo and we had much smoother sailing from there.

🏈 NFL Update: We are past week 9 already! While the Cowboys play a great season (6-2), my 49ers (3-5) are last in NFC West. Mixed feelings!

Follow the sign to find Growth

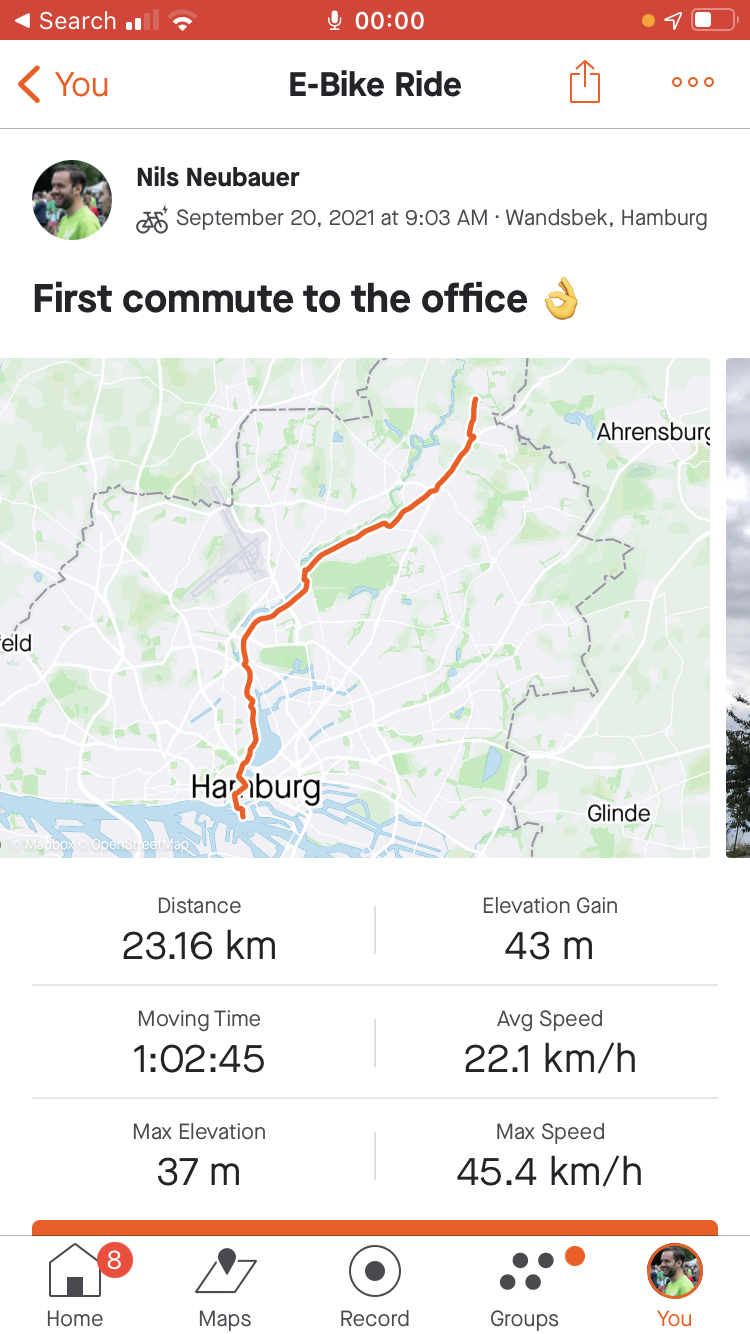

I didn’t even know I can cycle at 45 km/h!

Our VW Bus “Kalle” ready to board the 🇩🇰 Ferry

Do you spot our 4 colleagues from 🇵🇹 Porto who joined remotely?

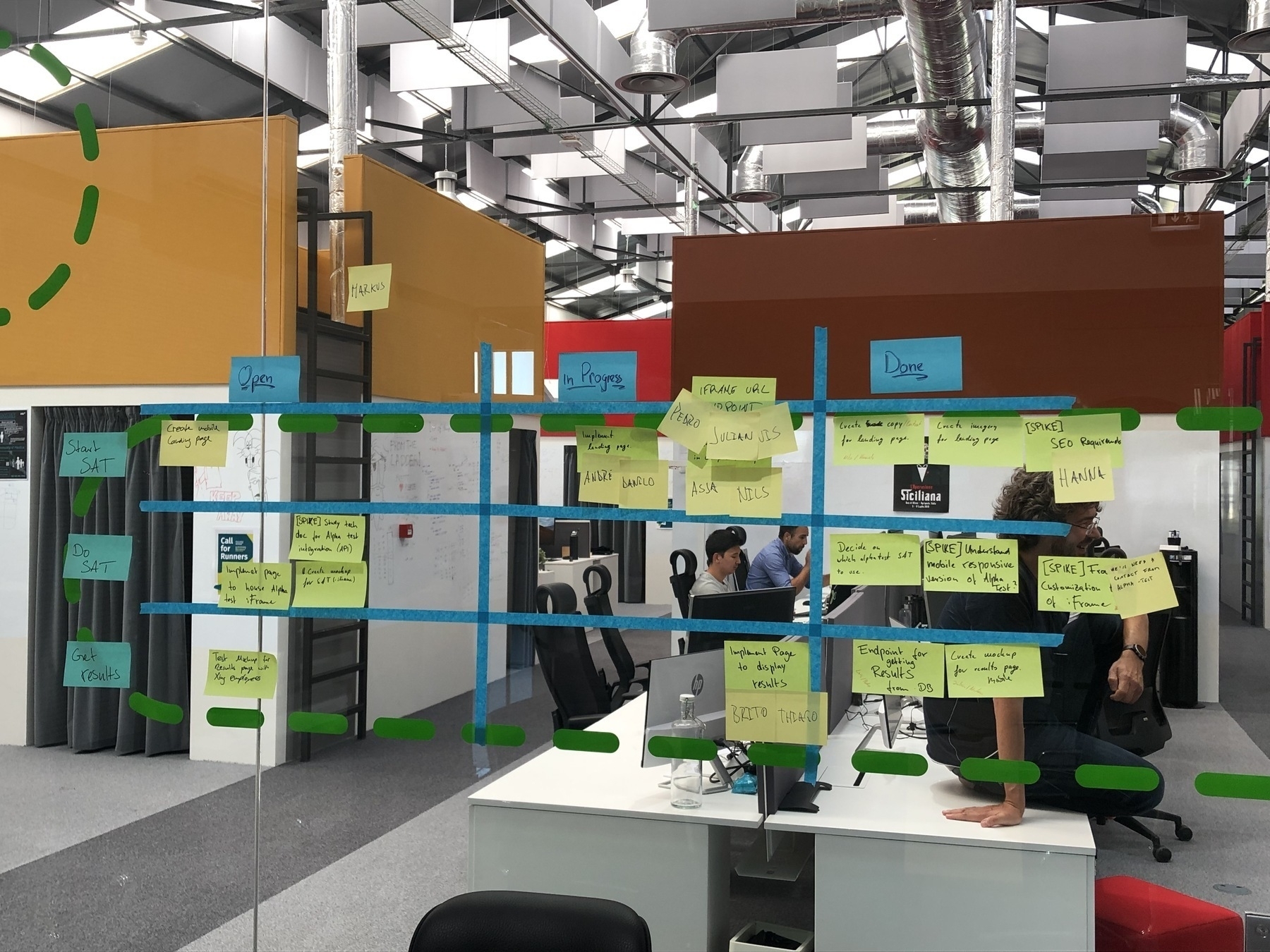

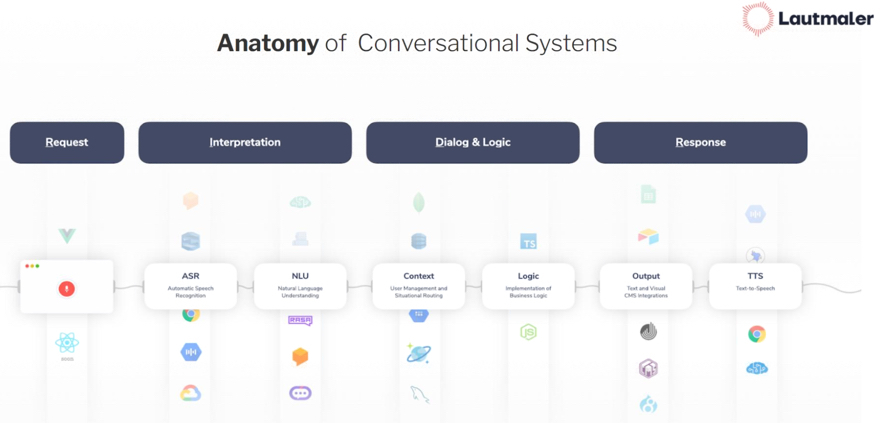

(ASR Automatic Speech Recognition) -> (NLU Natural Language Understanding) -> (Context User Management and Situational Routing) -> (Logic Implementation of Business Logic) -> (Output Text and Visual CMS Integration) -> (TTS Text-to-Speech)
